Tutorial: Generative QA with Retrieval-Augmented Generation

Last Updated: November 24, 2022

While extractive QA highlights the span of text that answers a query, generative QA can return a novel text answer that it has composed. In this tutorial, you will learn how to set up a generative system using the Retrieval-Augmented Generation (RAG) model, which conditions the answer generator on a set of retrieved documents.

Prepare environment

Colab: Enable the GPU runtime

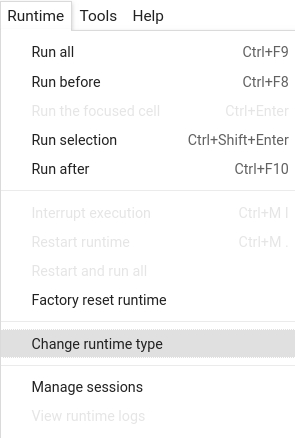

Make sure you enable the GPU runtime so this tutorial runs at a reasonable speed: Runtime -> Change runtime type -> Hardware accelerator -> GPU.

You can double-check whether the GPU runtime is enabled with the following command:

%%bash

nvidia-smi

To start, install the latest version of Haystack from its GitHub repository with pip, including the colab and faiss extras:

%%bash

pip install --upgrade pip

pip install "git+https://github.com/deepset-ai/haystack.git#egg=farm-haystack[colab,faiss]"

Logging

Before importing Haystack, we configure how logging messages are displayed and which log level is used. An example log message looks like this: "INFO - haystack.utils.preprocessing - Converting data/tutorial1/218_Olenna_Tyrell.txt". The default log level in basicConfig is WARNING, so the explicit level parameter is not strictly necessary, but it makes the level easy to change:

import logging

logging.basicConfig(format="%(levelname)s - %(name)s - %(message)s", level=logging.WARNING)

logging.getLogger("haystack").setLevel(logging.INFO)
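Haystack's log messages carry module-based logger names (such as haystack.utils.preprocessing in the example message above), so setting INFO on the parent "haystack" logger also applies to those children through Python's logger hierarchy, while every other library stays at the root WARNING level. The standard-library sketch below checks this; "some_other_library" is a hypothetical logger name used only for contrast.

```python
import logging

# Same configuration as above: root logger at WARNING, "haystack" logger at INFO
logging.basicConfig(format="%(levelname)s - %(name)s - %(message)s", level=logging.WARNING)
logging.getLogger("haystack").setLevel(logging.INFO)

# A child logger has no level of its own, so it inherits from its nearest configured ancestor
child = logging.getLogger("haystack.utils.preprocessing")
# An unrelated (hypothetical) logger falls back to the root logger's level
other = logging.getLogger("some_other_library")

print(logging.getLevelName(child.getEffectiveLevel()))  # INFO
print(logging.getLevelName(other.getEffectiveLevel()))  # WARNING in a fresh interpreter
print(child.isEnabledFor(logging.INFO))  # True
```

This is why the tutorial can show Haystack's INFO messages without flooding the output with INFO logs from its dependencies.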

Let’s download a CSV file containing some sample text and preprocess the data.

import pandas as pd

from haystack.utils import fetch_archive_from_http

# Download sample

doc_dir = "data/tutorial7/"

s3_url = "https://s3.eu-central-1.amazonaws.com/deepset.ai-farm-qa/datasets/small_generator_dataset.csv.zip"

fetch_archive_from_http(url=s3_url, output_dir=doc_dir)

# Create dataframe with columns "title" and "text"

df = pd.read_csv(f"{doc_dir}/small_generator_dataset.csv", sep=",")

# Minimal cleaning

df.fillna(value="", inplace=True)

print(df.head())
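The same load-and-clean step can be sketched with the standard library's csv module, for example where pandas is not installed. This is an illustration under assumptions, not part of the tutorial: it assumes "title" and "text" header columns (as the comment in the code above says), reads an in-memory sample instead of the downloaded file, and mirrors df.fillna(value="") by turning missing fields into empty strings.

```python
import csv
import io


def load_rows(f):
    """Read "title"/"text" rows, replacing missing values with empty strings."""
    reader = csv.DictReader(f, restval="")  # restval fills columns absent from short rows
    return [{"title": row.get("title") or "", "text": row.get("text") or ""} for row in reader]


# In-memory stand-in for the CSV; for the real file you might pass
# open(path, newline="", encoding="utf-8") (the UTF-8 encoding is an assumption)
sample = io.StringIO("title,text\nExample title,First passage.\n,Second passage\n")
rows = load_rows(sample)
print(rows[1])  # {'title': '', 'text': 'Second passage'}
```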

We can cast our data into Haystack Document objects. Alternatively, we can use plain dictionaries with “content” and “meta” fields.

from haystack import Document

# Use data to initialize Document objects

titles = list(df["title"].values)

texts = list(df["text"].values)

documents = []

for title, text in zip(titles, texts):
    documents.append(Document(content=text, meta={"name": title or ""}))
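For the dictionary alternative mentioned above, a minimal sketch looks like this. It assumes Haystack 1.x field names, "content" and "meta" (the same names the Document constructor above uses), and replaces the DataFrame columns with two toy values so the snippet runs on its own.

```python
# Stand-ins for list(df["title"].values) and list(df["text"].values)
titles = ["First title", None]
texts = ["First passage.", "Second passage."]

# One dict per passage: "content" holds the text, "meta" holds extra fields such as the title
docs_as_dicts = [
    {"content": text, "meta": {"name": title or ""}}
    for title, text in zip(titles, texts)
]
print(docs_as_dicts[1])  # {'content': 'Second passage.', 'meta': {'name': ''}}
```

A list like this can be passed to document_store.write_documents() in place of Document objects.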

Here we initialize the FAISSDocumentStore, the DensePassageRetriever, and the RAGenerator. We use FAISS because it is optimized for storing and searching dense vectors.

from haystack.document_stores import FAISSDocumentStore

from haystack.nodes import RAGenerator, DensePassageRetriever

# Initialize FAISS document store.

# Set `return_embedding` to `True` so the generator doesn't have to re-embed the retrieved documents

document_store = FAISSDocumentStore(faiss_index_factory_str="Flat", return_embedding=True)

# Initialize the DPR retriever, which encodes documents and questions and retrieves matching documents

retriever = DensePassageRetriever(

document_store=document_store,

query_embedding_model="facebook/dpr-question_encoder-single-nq-base",

passage_embedding_model="facebook/dpr-ctx_encoder-single-nq-base",

use_gpu=True,

embed_title=True,

)

# Initialize RAG Generator

generator = RAGenerator(

model_name_or_path="facebook/rag-token-nq",

use_gpu=True,

top_k=1,

max_length=200,

min_length=2,

embed_title=True,

num_beams=2,

)
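The num_beams, min_length and max_length arguments of RAGenerator control decoding: with num_beams=2 the generator keeps the two best partial answers at every step instead of committing greedily to one. The toy below illustrates this with a simplified beam search over a hypothetical next-token table; it is not the Haystack or Hugging Face implementation, and the vocabulary, probabilities and function name are made up.

```python
import math

# Hypothetical next-token probabilities: NEXT[previous_token][next_token]
NEXT = {
    "<s>": {"the": 0.5, "a": 0.4, "</s>": 0.1},
    "the": {"cat": 0.6, "dog": 0.4},
    "a": {"dog": 0.9, "cat": 0.1},
    "cat": {"</s>": 1.0},
    "dog": {"</s>": 1.0},
}


def beam_search(num_beams=2, min_length=2, max_length=5):
    """Simplified beam search: keep the num_beams best partial sequences per step."""
    beams = [(0.0, ["<s>"])]  # (sum of log-probabilities, tokens so far)
    finished = []
    for _ in range(max_length):
        candidates = []
        for score, seq in beams:
            for token, p in NEXT[seq[-1]].items():
                # Forbid ending the answer before it has min_length tokens
                if token == "</s>" and len(seq) - 1 < min_length:
                    continue
                candidates.append((score + math.log(p), seq + [token]))
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = []
        for score, seq in candidates[:num_beams]:
            # Completed sequences leave the beam; the rest are expanded next step
            (finished if seq[-1] == "</s>" else beams).append((score, seq))
        if not beams:
            break
    finished.extend(beams)  # sequences still open when max_length was reached
    best_score, best_seq = max(finished, key=lambda c: c[0])
    tokens = [t for t in best_seq if t not in ("<s>", "</s>")]
    return tokens, best_score


print(beam_search(num_beams=1)[0])  # ['the', 'cat']  (probability 0.5 * 0.6 = 0.30)
print(beam_search(num_beams=2)[0])  # ['a', 'dog']    (probability 0.4 * 0.9 = 0.36)
```

Greedy decoding (one beam) commits to the locally likelier first token and misses the better overall sequence that the second beam keeps alive.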

We write the documents to the DocumentStore, first deleting any existing documents and then calling write_documents().

The update_embeddings() method uses the retriever to create an embedding for each document.

# Delete existing documents in the document store

document_store.delete_documents()

# Write documents to document store

document_store.write_documents(documents)

# Add document embeddings to the index

document_store.update_embeddings(retriever=retriever)
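After update_embeddings(), the store holds one passage vector per document, and at query time the DPR query vector is compared with them by inner product. With faiss_index_factory_str="Flat" that search is exhaustive: every stored vector is scored. A pure-Python sketch of exact (flat) inner-product search, using made-up 3-dimensional vectors in place of DPR's 768-dimensional embeddings; flat_search is a hypothetical name, not a FAISS or Haystack function.

```python
import heapq


def flat_search(query_vec, doc_vecs, top_k):
    """Exact search: score every stored vector by inner product and keep the top_k ids."""
    scores = (
        (sum(q * d for q, d in zip(query_vec, vec)), doc_id)
        for doc_id, vec in doc_vecs.items()
    )
    return [doc_id for _, doc_id in heapq.nlargest(top_k, scores)]


# Made-up embeddings; real DPR vectors have 768 dimensions
doc_vecs = {
    "doc_a": [0.9, 0.1, 0.0],
    "doc_b": [0.2, 0.8, 0.1],
    "doc_c": [0.5, 0.5, 0.5],
}
query = [1.0, 0.2, 0.0]
print(flat_search(query, doc_vecs, top_k=2))  # ['doc_a', 'doc_c']  (scores 0.92 and 0.60)
```

Exhaustive search returns exact results and is fine for a small dataset like this one; approximate FAISS index types trade some accuracy for speed on larger collections.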

Here are our questions:

QUESTIONS = [

"who got the first nobel prize in physics",

"when is the next deadpool movie being released",

"which mode is used for short wave broadcast service",

"who is the owner of reading football club",

"when is the next scandal episode coming out",

"when is the last time the philadelphia won the superbowl",

"what is the most current adobe flash player version",

"how many episodes are there in dragon ball z",

"what is the first step in the evolution of the eye",

"where is gall bladder situated in human body",

"what is the main mineral in lithium batteries",

"who is the president of usa right now",

"where do the greasers live in the outsiders",

"panda is a national animal of which country",

"what is the name of manchester united stadium",

]

Now let’s run our system! The retriever picks out a small subset of documents that it finds relevant, and these are used to condition the generator as it generates the answer. The output should be novel text that forms an answer to your question.
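The facebook/rag-token-nq model follows the RAG-Token formulation: at each decoding step it mixes the next-token distributions obtained with each retrieved document z, weighted by the document probability, p(y_i | x, y_<i) ≈ Σ_z p(z | x) · p(y_i | x, z, y_<i), where p(z | x) is a softmax over the query-document scores of the retrieved documents. The sketch below computes one such step with invented scores and a two-token toy vocabulary; the function names are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw retrieval scores into probabilities p(z | x)."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def rag_token_step(doc_scores, per_doc_next_token):
    """One RAG-Token decoding step: sum over z of p(z | x) * p(token | x, z, prefix)."""
    mixed = {}
    for p_z, dist in zip(softmax(doc_scores), per_doc_next_token):
        for token, p_tok in dist.items():
            mixed[token] = mixed.get(token, 0.0) + p_z * p_tok
    return mixed


# Invented example: two retrieved documents, the first scored higher by the retriever
doc_scores = [math.log(3.0), 0.0]  # softmax gives p(z | x) = [0.75, 0.25]
per_doc_next_token = [
    {"A": 0.8, "B": 0.2},  # next-token distribution given document 1
    {"A": 0.4, "B": 0.6},  # next-token distribution given document 2
]
mixed = rag_token_step(doc_scores, per_doc_next_token)
print({t: round(p, 3) for t, p in mixed.items()})  # {'A': 0.7, 'B': 0.3}
```

Because the mixture is recomputed at every token, different parts of an answer can draw on different retrieved documents.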

# Combine the retriever and the generator in a GenerativeQAPipeline

from haystack.pipelines import GenerativeQAPipeline

from haystack.utils import print_answers

pipe = GenerativeQAPipeline(generator=generator, retriever=retriever)

for question in QUESTIONS:
    res = pipe.run(query=question, params={"Generator": {"top_k": 1}, "Retriever": {"top_k": 5}})
    print_answers(res, details="minimum")
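In pipe.run(), params is keyed by node name, so "Retriever": {"top_k": 5} sets how many documents the retriever hands to the generator, and "Generator": {"top_k": 1} sets how many answers come back per question. The toy below mimics only that routing; it is not Haystack's Pipeline implementation, and fake_retrieve, fake_generate and run_two_node_pipeline are hypothetical names that return placeholders.

```python
def fake_retrieve(query, top_k=10):
    # Hypothetical retriever: returns top_k placeholder document ids (default is arbitrary)
    return [f"doc_{i}" for i in range(top_k)]


def fake_generate(query, documents, top_k=1):
    # Hypothetical generator: returns top_k placeholder answers built from the documents
    return [f"answer {i} from {len(documents)} docs" for i in range(top_k)]


def run_two_node_pipeline(query, params):
    """Pass each node only its own entry from params, then chain retriever -> generator."""
    documents = fake_retrieve(query, **params.get("Retriever", {}))
    answers = fake_generate(query, documents, **params.get("Generator", {}))
    return {"query": query, "documents": documents, "answers": answers}


toy_res = run_two_node_pipeline(
    "who is the owner of reading football club",
    params={"Generator": {"top_k": 1}, "Retriever": {"top_k": 5}},
)
print(len(toy_res["documents"]), toy_res["answers"])  # 5 ['answer 0 from 5 docs']
```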

About us

This Haystack notebook was made with love by deepset in Berlin, Germany
